Below are steps to configure mirroring for a MS SQL Database, and how to use mirroring database in an effective way:

1. Use SQL Management Studio to check if database is in Full Recovery model. If not, let configure it as Full Recovery.

2. Issue a full backup of the database, for example:

BACKUP DATABASE [DBNAME] TO DISK = 'E:\DBNAME.bak';

3. Issue a transaction log backup of the database, for example:

BACKUP LOG [DBNAME] TO DISK = 'E:\DBNAME.trn'; Restore the full backup to the Mirror server with the NORECOVERY option. You can use SQL Management Studio to do this, or use a command as below:

RESTORE DATABASE [DBNAME] FROM DISK = 'Z:\DBNAME.bak' WITH NORECOVERY

In the case you want to move files on Mirror server to other folder path differing with folder path on Principal server, you should do as the following:

RESTORE DATABASE [DBNAME] FROM DISK = 'Z:\DBNAME.bak' WITH NORECOVERY,

MOVE 'Logical_Data' TO 'C:\NewPath\DBNAME.mdf',

MOVE 'Logical_Log' TO 'C:\NewPath\DBNAME_log.ldf'

You can know Logical_Data and Logical_Log of the database on Principal server by run a command in step 18.

5. In Mirror server, restore log backup also with the NORECOVERY option, for example:

RESTORE LOG [DBNAME] FROM DISK = 'Z:\DBNAME.trn' WITH FILE=1, NORECOVERY

If you have any auto backup log in Principal server, you should turn off it. Otherwise having any additional log backups before you start mirroring, you must also restore all of those log backups, in sequence (FILE=2, FILE=3 etc.), to the mirror server using WITH NORECOVERY.

6. Configure IPs and host name between 2 servers. On each server open file:

C:\Windows\System32\drivers\etc\host with Administrator right then add a line with IP to point the partner host name, for example:

192.168.72.100 PARTNER_HOST_NAME

To know the host name, open CMD then run command hostname, for example:

C:\Users\Administrator>hostname

7. Configure firewall (inbound port) of each server to allow the port used for this mirroring (e.g. 5022)

Can use

PsPing for checking port.

8. Configure to allow principal server access SQL on mirroring server. For example, you open port 1433 for principal server.

9. If server instances (mirroring instance & principal instance) are running under different domain user accounts, you should create a same login user for running these instances. This login user can be a Windows user.

In the case both servers are not running on same domain and your servers' names are not in FQDN, you can skip this step and just do the following steps (10, 11, 12, 13).

10. Create a certificate and an endpoint on Principal server:

USE master

GO

CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'your_password'

GO

CREATE CERTIFICATE Principal_cert

WITH SUBJECT = 'Principal certificate',

START_DATE = '01/01/2015',

EXPIRY_DATE = '01/01/2050'

GO

CREATE ENDPOINT Mirroring

STATE = STARTED

AS TCP (LISTENER_PORT = 5022, LISTENER_IP = ALL)

FOR DATABASE_MIRRORING

(

AUTHENTICATION = CERTIFICATE Principal_cert,

ENCRYPTION = REQUIRED ALGORITHM AES,

ROLE = ALL

)

GO

BACKUP CERTIFICATE Principal_cert

TO FILE = 'Z:\Principal_cert.cer'

GO

11. Create a certificate and an endpoint on Mirror server:

USE master

GO

CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'your_passport'

GO

CREATE CERTIFICATE Mirroring_cert

WITH SUBJECT = 'Mirroring certificate',

START_DATE = '01/01/2015',

EXPIRY_DATE = '01/01/2050'

GO

CREATE ENDPOINT Mirroring

STATE = STARTED

AS TCP (LISTENER_PORT = 5022, LISTENER_IP = ALL)

FOR DATABASE_MIRRORING

(

AUTHENTICATION = CERTIFICATE Mirroring_cert,

ENCRYPTION = REQUIRED ALGORITHM AES,

ROLE = ALL

)

GO

BACKUP CERTIFICATE Mirroring_cert

TO FILE = 'Z:\Mirroring_cert.cer';

GO

12. Create a user login for the endpoint on Principal server:

USE master

GO

CREATE LOGIN Mirroring_login WITH PASSWORD = 'your_password'

GO

CREATE USER Mirroring_user FOR LOGIN Mirroring_login

GO

CREATE CERTIFICATE Mirroring_cert

AUTHORIZATION Mirroring_user

FROM FILE = 'Z:\Mirroring_cert.cer'

GO

GRANT CONNECT ON ENDPOINT::Mirroring TO [Mirroring_login]

GO

13. Create a user login for the endpoint on Mirror server:

USE master

GO

CREATE LOGIN Principal_login WITH PASSWORD = 'your_password'

GO

CREATE USER Principal_user FOR LOGIN Principal_login

GO

CREATE CERTIFICATE Principal_cert

AUTHORIZATION Principal_user

FROM FILE = 'Z:\Principal_cert.cer'

GO

GRANT CONNECT ON ENDPOINT::Mirroring TO [Principal_login]

GO

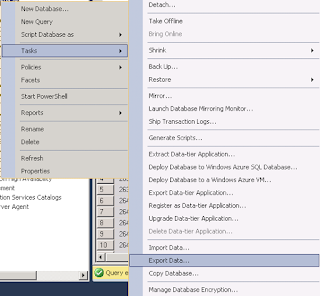

Use SQL Management Studio to set up Mirroring (partners) for your database on Principle server. Then start your mirroring.

For more quickly, you can user the following commands on the mirror then the principal:

USE MASTER

GO

ALTER DATABASE [DBANME] SET PARTNER = 'TCP://PrincipalServer:5022'

GO

USE MASTER

GO

ALTER DATABASE [DBNAME] SET PARTNER = 'TCP://MirrorServer:5022'

GO

If you see the status "Synchronized", that means everything goes well. If not, the status may be "Suspended". In that status, you need to clean on mirrored server for getting more space then click Resume button here to start the mirroring again.

15. In case of you removed the mirroring for maintaining / cleaning DB on principal, after removing mirorring you can do step 2, 3, 4, 5 to update new DB to the mirror server. Then you can start the mirroring again by one of the following ways:

15.1 Start the mirroring session again by running below commands:

First on the mirror:

ALTER DATABASE [DBNAME] SET PARTNER OFF

ALTER DATABASE [DBNAME] SET PARTNER = N'TCP://PrincipalServer:5022'

Then on the principal:

ALTER DATABASE [DBNAME] SET PARTNER = N'TCP://MirrorServer:5022'

15.2 Recreate ENDPOINT for the mirroring, let drop it on Principal server and create again.

DROP ENDPOINT Mirroring

CREATE ENDPOINT Mirroring

STATE = STARTED

AS TCP (LISTENER_PORT = 5022, LISTENER_IP = ALL)

FOR DATABASE_MIRRORING

(

AUTHENTICATION = CERTIFICATE Principal_cert,

ENCRYPTION = REQUIRED ALGORITHM AES,

ROLE = ALL

)

GRANT CONNECT ON ENDPOINT::Mirroring TO [Mirroring_login]

On Mirror server, we can do nothing.

To check existing certificates, run:

SELECT * FROM sys.certificates

15.3 If you want to redo all, let drop all on each server then do above steps again.

USE master

DROP ENDPOINT Mirroring

DROP CERTIFICATE Principal_cert

DROP CERTIFICATE Mirroring_cert

DROP LOGIN [login]

DROP USER [user]

DROP MASTER KEY

GO

16. To check whether a server instance has a database mirroring endpoint and to learn its role and state, on that instance, use the following Transact-SQL statement:

SELECT * FROM sys.database_mirroring_endpoints

SELECT role_desc, state_desc FROM sys.database_mirroring_endpoints

or check state, stop and start:

SELECT * FROM sys.endpoints

ALTER ENDPOINT endpoint_name STATE = STOPPED

ALTER ENDPOINT endpoint_name STATE = STARTED

17. To manually bring mirroring database online after principal database is down use below commands:

ALTER DATABASE [DBNAME] SET PARTNER OFF

RESTORE DATABASE [DBNAME] WITH RECOVERY

18. To use mirroring database any time (e.g. for your reports), let create snapshot of mirroring database then use it as an independent database.

Firstly, you need know the Logical Name of your mirroring database, run:

SELECT name AS LogicalName, type_desc, physical_name

FROM sys.master_files

WHERE DB_NAME(database_id) = 'DBNAME'

Then create the snapshot:

CREATE DATABASE [DBNAME_SNAPSHOT_NAME] ON --

(

NAME = 'LogicalName' , FILENAME = 'Z:\DBNAME_SNAPSHOT_NAME.ss' -- replace with the Logical Name above

)

AS SNAPSHOT OF [DBNAME]

19. Let create full log backup for principal database for every 15 minutes, if not the log of principal database will increase quickly. In the case of the disk is getting full and you want to shrink log file to free unused space, let restart principal server then run Shrink log file.

That's all. Bye! Any comments is welcome.